What Determines a Limit to Growth? “Planet Earth Next 100 Years”


***

This is an article version of a class Cynthia Chung gave for the symposium “The Earth Next 100 Years” which can be viewed here.

***

This might seem like a rather ignorant or simplistic question to some. Many will think the answer rather obvious, that the Limit to Growth is determined by limited resources, of course!

However, it turns out this answer is not so simple when we start to ask ourselves some basic follow-up questions. For instance, what determines the limit to a resource? Is a specific resource something that is fixed in its amount, in its availability on Earth that remains unchanged for all time?

That is, can a resource only be used up or can it also be reproduced, regenerated?

Obviously, if a resource can be regenerated, then the “limit” to that specific resource will be determined by the rate it is used vs. the rate it is able to be replenished.
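The point about use versus replenishment can be made concrete with a minimal sketch. It assumes a hypothetical resource stock with constant yearly use and replenishment; the function name and all numbers are illustrative and do not come from the lecture.

```python
def years_until_depleted(stock, use_per_year, regen_per_year):
    """Return the years until a stock runs out, or None if it never does.

    All quantities are hypothetical and share the same arbitrary units.
    """
    net_loss = use_per_year - regen_per_year
    if net_loss <= 0:
        # Replenishment keeps pace with use, so the stock is never exhausted.
        return None
    return stock / net_loss


# Same stock and same use, but three different replenishment rates.
print(years_until_depleted(1000, 50, 0))   # no replenishment
print(years_until_depleted(1000, 50, 40))  # partly replenished
print(years_until_depleted(1000, 50, 50))  # fully replenished
```

With these made-up numbers the "limit" moves from 20 years, to 100 years, to no limit at all, purely because the replenishment rate changes.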


All resources that are essential to life, including human life, are naturally renewable. There are natural cycles that cause these resources to be regenerated, including the natural cycles of water, oxygen, vegetation (including food) and so forth. Thus, everything that is essential for life is already naturally renewable on Earth.

The concern is thus that we are using these resources too quickly with the rate of human population growth, such that these renewable resources will become scarce, causing us to reach a point where the collapse of civilization and rapid depopulation will be inevitable due to extreme scarcity in these essential resources. Not to mention the effects this will have on other life forms on Earth.

This point of catastrophe is calculated as the point when the human population will exceed its carrying capacity. However, what determines the carrying capacity?

Thomas Malthus himself, who created the Malthusian growth model, never actually specified an exact number for when the human population would hit its carrying capacity. This was because it was understood that the carrying capacity is not something fixed but could be increased or decreased depending on human-made innovations, such as agriculture. Thomas Malthus did, however, make the prophecy that we would hit our carrying capacity by 1890, about 100 years from the time he made the prediction, which, needless to say, was very much off the mark.

[It should be noted that Malthus was fully convinced that his prophecy was accurate and that the only way to avoid such a catastrophe was to severely curb the growth of the human population immediately. This included the denial of medical care and food to the needy since it was thought by the followers of Malthus, that the postponement of their death would only use up further resources without any contribution to society. Sounds a little familiar doesn’t it?]

The reason why Malthus was so far off the mark is that such a point in the future, concerning the human carrying capacity, cannot be determined by a linear extrapolation. This is because, as already mentioned, human innovations change our relationship to the resources we use in a qualitative manner and not just a quantitative manner. Qualitative change has always been the mathematician’s nightmare in producing models that will supposedly predict trends in the future. How can a mathematical model predict all the qualitative change that will happen in the future? Is it even possible? (For more on this refer to my paper “The Curse of Game Theory: Why it is in Your Self-Interest to Exit the Rules of the Game”).

This is something really important to keep in mind. There is no specific agreed-upon number x such that, when the human population reaches it, we will have hit our carrying capacity. Such a number could only be fixed if we were to have absolutely no change in our societies alongside a growing population.

But we do have change, and that is everything.

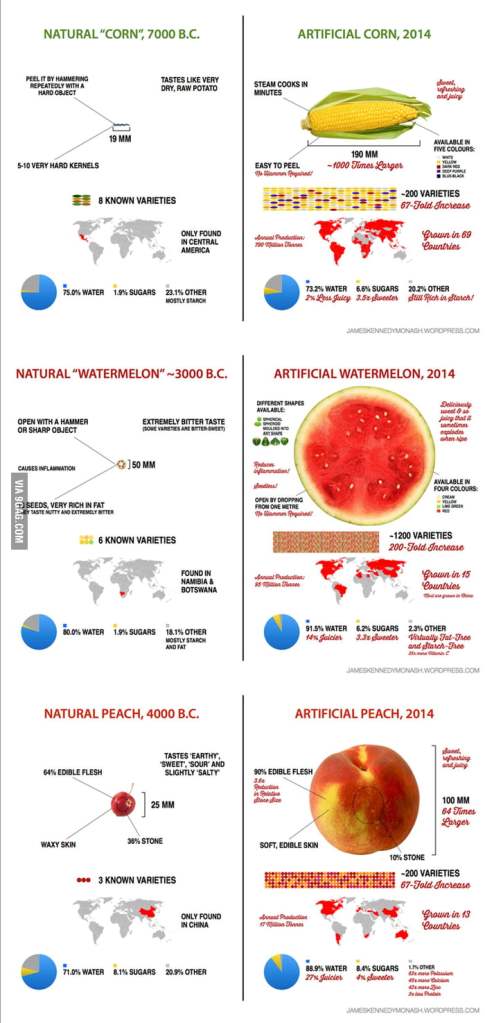

Human innovations have so far increased, and continue to increase, the quantity of resources produced. We have also made qualitative changes, such as increasing diversity and efficiency in the biosphere itself. For instance, many of the basic foods we eat today, such as corn, apples, watermelons and bananas, were created through human-made innovations.

Even livestock would not exist in the form they do today had it not been for human-made innovations. These innovations go back centuries, well before we knew anything about the genetic domain. The ability to not only grow our own food but to increase its efficiency in nutrition is a very important ability that has allowed for the sustaining of more people on Earth.

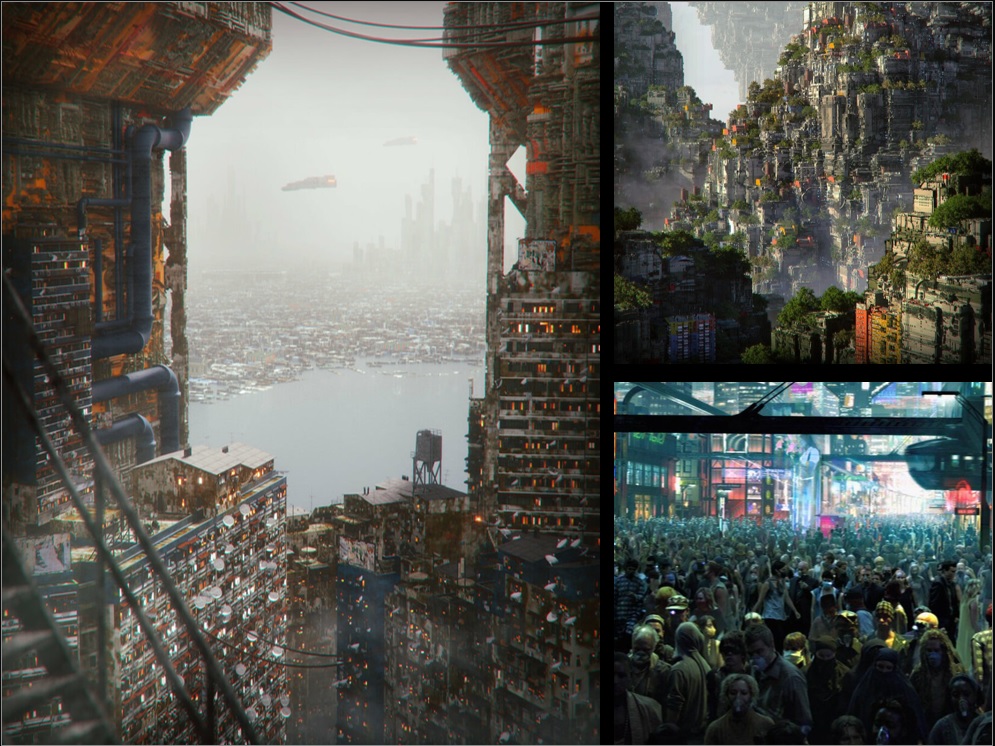

There is also the concern over limited space. We have a finite amount of space on Earth, this is true, and thus there is only so much space to support a decent standard of living, and in harmony with other life forms on Earth. Many think we are fast approaching the dystopic visions that have been heavily pumped into our stream of consciousness, such as these.

Picture to the right is of what a future apartment is thought to look like in the dystopic futuristic vision of the movie “Fifth Element.”

Isn’t this inevitable? After all there is only so much room, and the poor will only be able to afford the smallest living spaces as land becomes an increasingly scarce resource. Not to mention, how will this affect our capability to grow food if space becomes severely limited? Can any kind of ecosystem or habitat coexist with us at that point?

This will be difficult for many to wrap their heads around, since not only are such dystopic predictions everywhere but also, there is a very real problem with how some modern cities are choosing to build themselves.

Let us first deal with the question of how much space we currently have, and then move onto this question of how cities should choose to organize themselves such that standard of living can actually increase with population growth rather than decrease.

Here is a rather extreme example, but I think it communicates the point effectively in terms of the sheer mass of the entire human population in relation to the size of the world. If we were to amass the entire world population, it would not even come close to filling up the Grand Canyon. We could pile everyone into one big heap in the Grand Canyon, which would look like the picture above.

Here is a more humane example: we can fit the entire human population (at 7.4 billion) into one-story townhouses, back to back, in just the state of Texas. We wouldn’t even need to build two-story townhouses. So we are nowhere near running out of actual space presently, and it won’t be until much, much further in the future that such a thing would start to become a concern; by then, who knows what kind of capabilities we will have. Suffice to say, it is not a crisis now, and won’t be for hundreds of years, if ever.
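As a rough check on the Texas example, here is a small sketch of the arithmetic. The population figure is the one used above; the area of Texas (about 695,662 square km in total) is an assumption brought in from outside this article, and the names are purely illustrative.

```python
# Assumption (not from the article): Texas covers roughly 695,662 km^2 in total.
TEXAS_AREA_KM2 = 695_662
WORLD_POPULATION = 7.4e9  # figure used in the article


def area_per_person_m2(area_km2, population):
    """Average ground area per person, in square metres."""
    return area_km2 * 1_000_000 / population


per_person = area_per_person_m2(TEXAS_AREA_KM2, WORLD_POPULATION)
print(f"{per_person:.0f} m^2 per person")
```

Under that assumption each person gets about 94 square metres of ground, roughly 1,000 square feet, which is in the range of a modest single-story home.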

Another concern is whether we will run out of space for food production.

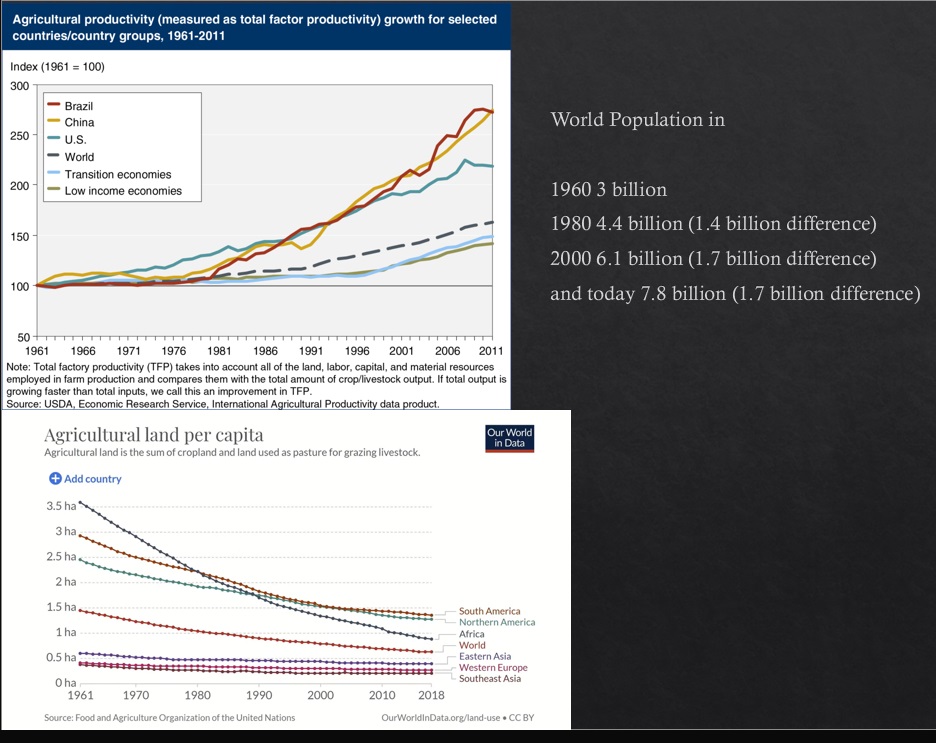

According to the USDA Economic Research Service on international agricultural productivity, the world is increasing its rate of food productivity in relation to the rate of human population growth (see graph below). And according to Our World in Data, the amount of space required to grow our food is decreasing, due to increased efficiency (see graph below).

Looking at the USDA graph, we can see that in comparison to the population growth between 1960, 1980, 2000 and today, our rate of food production has been increasing.

Thus, not only do we have more than plenty of space to fit the human population on Earth, but we also find that world food production is increasing, in relation to population growth, and the amount of space required to produce this food has decreased.

So we are presently nowhere near hitting our carrying capacity.

What we are seeing presently is a lack of essential resources such as food and water in localised regions. There is not a shortage of food production occurring; we have the capability of producing enough food for the entire world population. The reason why certain areas are suffering from critical shortages is political and economic, which I will get into a little later on.

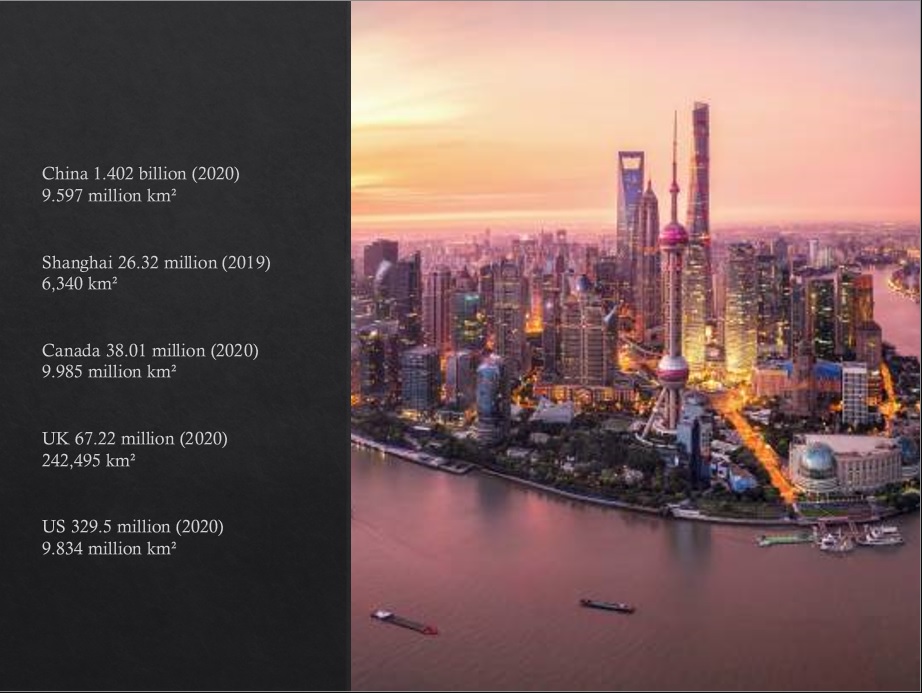

In terms of the argument for overcrowding in certain cities of the world, as you can see above, India, eastern Asia (primarily China) and also Nigeria, Ethiopia, and the Democratic Republic of Congo in Africa are among the most densely populated areas in the world.

The common conception is that if you have that sort of population density, you have no choice but to have overcrowding in your cities. However, as we saw with the previous example, if we can fit the world population into Texas with a townhouse each, then the top countries in population size are not really overcrowded. The issue is more so, especially in the areas of Africa and India, a question of living standard. It is also a question of how cities with large populations are organized.

Shanghai is the most populated city in China. Its population is close in size to that of the whole of Canada and about a third of that of the UK. With such a population density, many in the West, who are not used to such things, think the living standard must be a lot lower.

However, what we would see if we actually visited Shanghai, is that it is actually quite a nice city. Above is a picture of Century Park, one of the many parks in Shanghai.

Let us look at another example.

Beijing is the capital of China, and also holds quite a large population density.

Above are the plans for the renovation of Chaoyang Park, which already exists but which the Chinese government wants to beautify further by adding more trees and so forth. To the left we can see the plan they have to incorporate more trees in between buildings and along the streets.

China, actually, takes it very seriously to have a balance between their rapid city growth and the need to include parks and trees so that there is also a connection to nature. If you have ever been to China, this is one thing that immediately stands out.

Many of these parks are not the average run of the mill either. They are made following the philosophy of feng shui which includes the idea that a park should invoke deeper reflection and a profound connection to Nature.

Nanjing is a good example of a city in China that is full of very thought-through and beautiful parks.

Parks in Nanjing

In comparison, let us look at what the population density for Canada looks like.

Needless to say, we are nowhere near “running out of space” for people. And China has shown that cities can still be beautiful with an increasing living standard alongside a high population density.

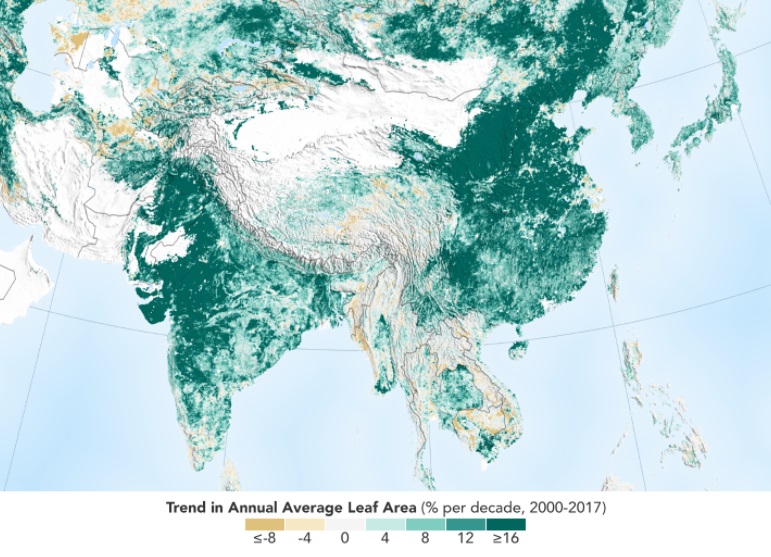

In addition, we see that countries such as China and India, with among the highest population densities, are also among the greenest regions on Earth. This has largely been due to China and India’s decision to plant man-made forests. This was done to address pollution problems, since trees are excellent air purifiers. They also happen to be great consumers of CO2. Large regions of greenery also make for excellent temperature control; many of these areas were previously desert. By invoking better temperature control, we also decrease the occurrence of extreme weather phenomena (for more on this refer to another lecture of mine). This also shows that large ecosystems can thrive in regions with high population densities.

Another fear that is often heard is that drinkable water will become a scarce resource which will cause us to have water wars in the future.

This is again, a situation where if we chose to do absolutely nothing and make no changes or innovations then this would be a possibility, just like with any resource.

Presently there are over 16 million square km of desert in the world. About 2/3 of the world is desert, with the Sahara making up 9.2 million square km of this area (though, as we saw with the previous NASA world map, the Earth is greener than it was 20 years ago and continues in this direction thanks to man-made forests).

Planting green vegetation is an excellent way to keep water inland and not lose it to the oceans. It is also, as already mentioned, excellent at regulating temperature and reducing extreme fluctuations in climate. For example, the Sahara desert is responsible for a lot of the hurricane activity that hits the eastern coast of the United States. This is due to the rapid temperature change from very hot to very cool that air currents experience when traveling over the Sahara followed by the Atlantic Ocean.

Not only is 2/3 of the world desert, but grassland regions are presently experiencing desertification, which is a major cause of water and food shortages in those localized regions. In addition, there is a lack of clean water, leading to further sickness and disease.

Allan Savory is someone who has talked extensively about a rather simple and cost effective solution to this problem (his Ted Talk presentation can be viewed here). His technique (which is actually just the return to an age old practice) has shown great success in former desert regions of Africa, the United States and Mexico.

Comparison of two nearby regions in Zimbabwe on the same day, one to the right managed by Allan Savory’s holistic management.

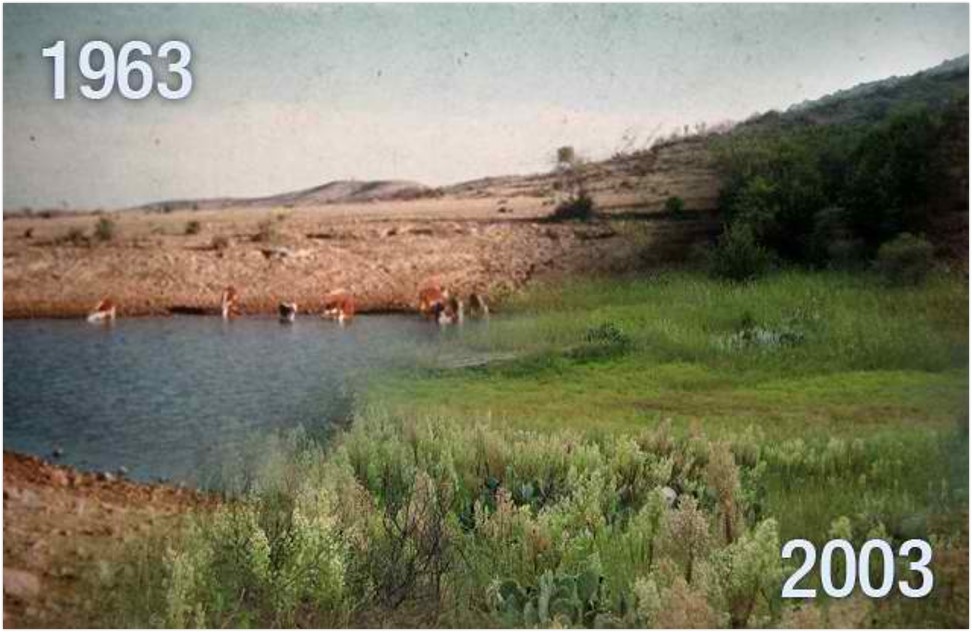

Before and After picture of same land region in Mexico after decades of using Allan Savory’s approach.

This was accomplished by Allan Savory and his team by using rotation of herd grazing in order to stimulate plant growth. This allowed old plant life (which acts somewhat like necrotic tissue) to be trampled by the passing herd, so that the soil can breathe more easily and is at the same time fertilized by manure. For more details on this, refer to his lecture.

Another exciting prospect for the future is weather control, through the controlled ionization of river systems in the sky!

Atmospheric ionization systems have been successfully used to increase precipitation and affect associated weather processes. This has been done in multiple locations around the world, cumulatively for three decades. With further development of these technologies, droughts around the world could be overcome in a completely new way: by the management of the water resources of the sky!

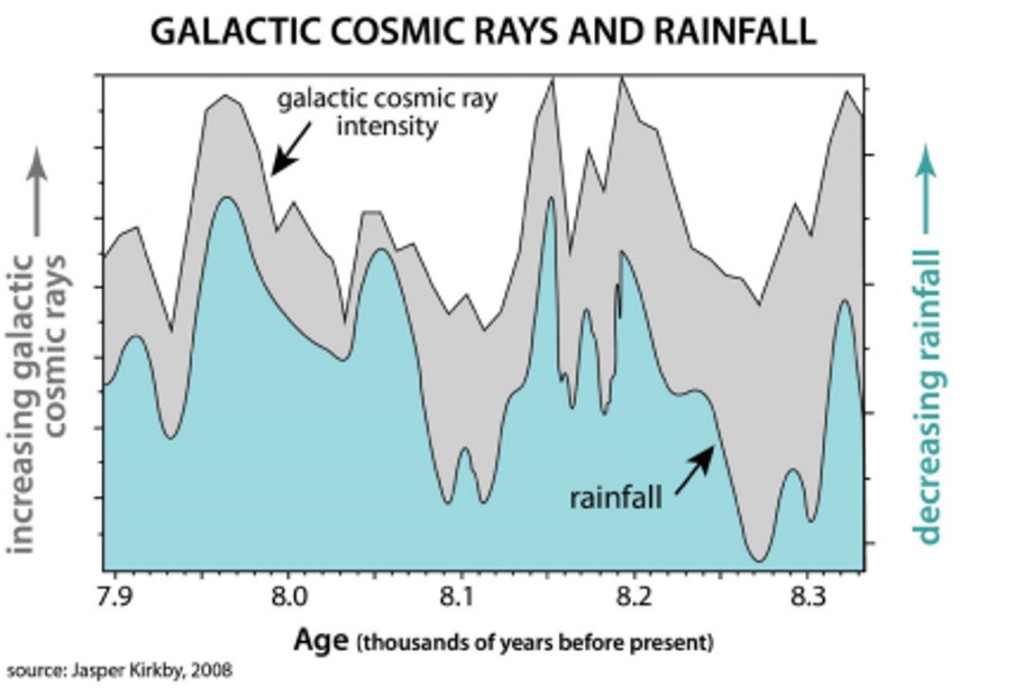

It has been reported in multiple studies that periods of low solar activity, and high galactic influence (that is cosmic rays) affect the amount of rainfall and also the amount of glaciation formation.

When we are in different galactic environments we see changes in our climate system. We also experience temperature change depending on whether we are moving above or below the galactic plane, as pictured below. Thus, through observations of many studies, we are finding that galactic processes dictate how climate and weather are expressed on Earth.

A study by Pérez & Peraza found that modulation of fluxes of galactic cosmic rays could also be the cause of Earth’s periods of ice ages. And that it could be connected with movement of our Solar System within the arms of our spiral galaxy as depicted in the above picture. They hypothesized that when the Solar System is inside the arms, there is more dust, so there is less flux of the galactic cosmic rays. And between the arms, we have the larger fluxes of the galactic cosmic rays. And these periods coincide, temporally, with periods of increased and decreased temperature of our planet.

Rainfall was produced in Mexico by using an iron mast that is connected by thin wires to peripheral towers. If for example, you put positive potential on this installation, the positive ions will be moved by the electric field up to the upper layers; and moving to the upper layers, they gain more and more water molecules and become nuclei to form clouds.

If you put your installation near the seashore, you can collect the humidity and then transport it, because you can put different potentials (one positive, the other negative) between two installations. This creates movement of the air, which is filled with these nuclei for the formation of clouds inland.

This thus helps to move air filled with humidity inland for cloud formation and rainfall further inland.

Sergey Pulinets has had impressive results using this process, and was able to fill up three dams in Mexico using this technology after one and a half years. They were even able to fight off forest fires in the Yucatan Peninsula, creating artificial rain using this technology, which yielded about a 20-30% increase in precipitation.

What this technology has shown us is that we live in a constant electric field which exists between the ionosphere and the ground. The potential difference between the ionosphere and the ground is the source of much weather formation, including cloud formation and thunderstorms as well as other weather phenomena.

The above picture looks like something out of a sci fi movie, but in fact, is a rather natural occurrence. These phenomena are referred to as Sprites or Red Sprites which are large-scale electric discharges that occur high above thunderstorm clouds, giving rise to a quite varied range of visual shapes flickering in the night sky. They are usually triggered by the discharges of positive lightning between an underlying thundercloud and the ground.

Thus Pulinets makes the point that their ionization technology uses everything that is given to us by nature, helping a little bit with this ionization to create additional centers of nucleation.

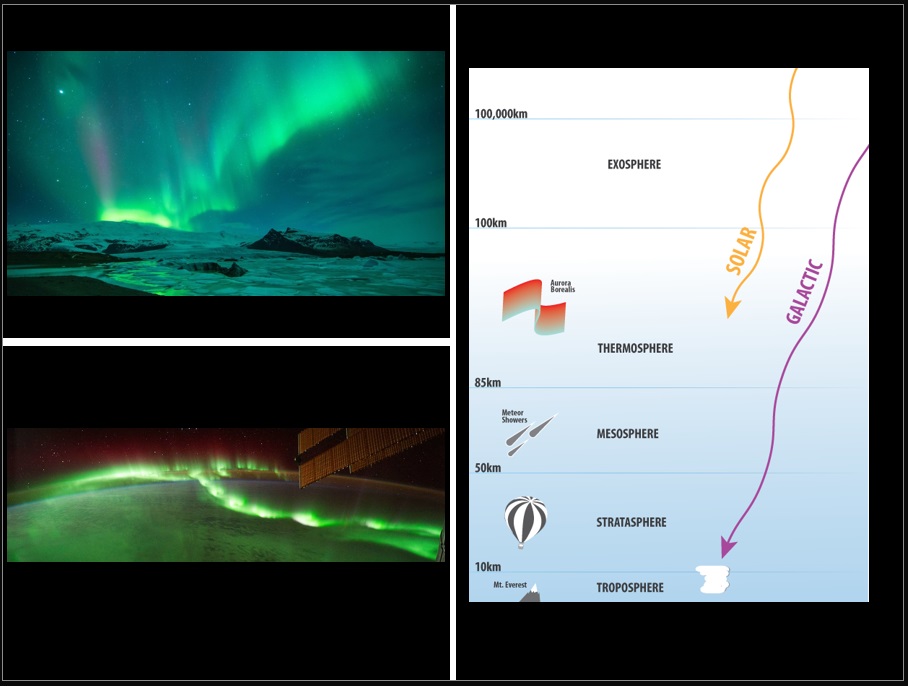

We have found through studies that the Sun, as well as high-energy radiation (such as cosmic rays) from the galaxy, are actually a constant input shaping the environment of the atmosphere, affecting climate, weather and how water moves through the water cycle.

Thus Pulinets’ ionization technology is actually acting in a very similar fashion to the ionizing effects of the galaxy.

That is, the sun and galactic cosmic rays (which have much higher energy) are the only known sources of ionization. This is the source of the Northern Lights. They excite molecules and atoms. In the case of the Northern Lights, it is oxygen and nitrogen particles that are excited, which causes us to see the green and red lines of the polar lights.

As we gain a better understanding of our galactic processes, we will also gain further understanding of, and control over, weather formation. In the future this will likely be the cheapest and easiest way to green deserts.

CO2 generators are a technology used today to grow vegetation in greenhouses, and they show much promise for use in space travel. They can pump up to 1500 parts per million of CO2, whereas the average CO2 level in the Earth’s atmosphere is 400 parts per million.

CO2 generators offer a lot of promise in producing yield in typically uninhabitable regions such as Antarctica, or in space, such as on the Moon or Mars.

So, where did the domineering concept of “Limits to Growth” that still penetrates the consciousness of present day policies come from?

In 1968, the Club of Rome, a think tank of an elite membership, was founded to address the problems of mankind. It was concluded in their extremely influential book titled “The Limits to Growth,” published in 1972, that such problems could not be solved on their own terms and that all were interrelated. It should also be made known to the reader that the Club of Rome was involved at nearly the inception of the World Economic Forum (WEF). It was clear that the conclusions found by the Club of Rome were meant to dictate policy amongst the global elite through the WEF venue.

Rather timely (or perhaps conveniently), one year after the publication of “The Limits to Growth,” in 1973, Australia’s largest computer made shockwaves predicting future trends such as pollution levels, population growth, availability of natural resources and quality of life on earth. ABC’s This Day Tonight aired the story on 9 November, 1973, which can be viewed below.

As the heading above summarizes, this super computer apparently predicted when the end of civilization would occur. Depending on the parameters it was given (it is after all only a computer), the year in which civilization would end would change, but one thing was consistent: no matter what year, civilization was most definitely on a course to its doom.

Let us look at a few of these predictions, shall we?

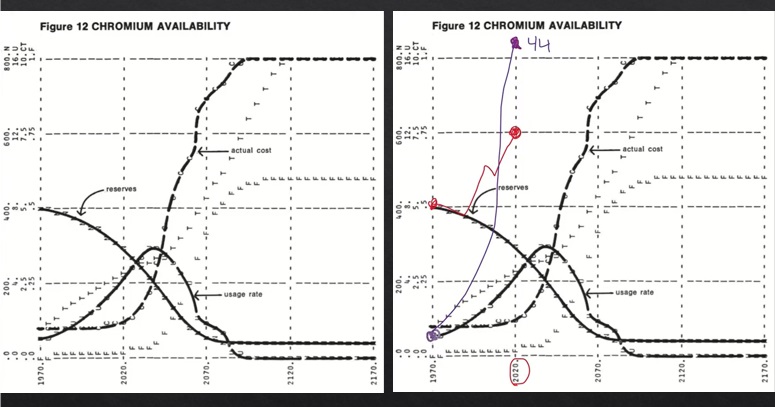

Here is a rather simple example of a prediction in chromium availability that was made by this super computer in Australia. If we look at the chart on the left, we see that according to the computer, the reserves of chromium will decrease as usage rates increase such that approximately 50 years into the future (pretty much where we are today in 2021) we should see a spike in actual cost of chromium. This spike in cost will decrease usage rates until no one can any longer afford to pay for the cost of chromium as its reserve levels reach a low.

However, if we look at the chart to the right (that was updated, by Jason Ross with the Schiller Institute, to what has actually occurred up to present day), we see that the reserves of chromium were decreasing up till the mid 1970s but then increased drastically to a much higher level. This in turn has affected the usage rates of chromium such that they are so high they cannot be shown on the graph, and are about 2.5 charts higher.

Suffice to say this super computer prediction of future trends for just ONE resource, chromium, was way WAY off!

With this in mind, let us look at some of the more general and much more complex predictions this super computer made for us.

Here we see four different parameters given to the super computer. Figure 35 shows the standard prediction of what the resource availability was in 1973. The next graph, figure 36, accounts for if these reserves were actually double what we assume them to be (this is key here since it acknowledges that such a thing is not really known but rather estimated). Figure 39 accounts for if resources were unlimited with pollution control. And figure 42 accounts for if resources were unlimited with pollution controls, increased food production and “perfect” birth control.

In each of the four scenarios, the doom of human civilization occurred during a different decade, but all occurred before the year 2100.

In the case of the first scenario, resources would be depleted to such a point that it would cause the plummet of food production and industrial growth, population growth would continue to increase until it was completely unsustainable and then it would collapse with nothing to fall back on. In the second scenario, with double the resources, pollution would increase to such a point that it would eventually lead to the death of the human population. In the third scenario, with unlimited resources and pollution controls, there would be a shortage of food unable to sustain the growing human population. In the fourth scenario, with unlimited resources, pollution controls, increased food production and “perfect” birth control, it appears industrial growth has caused an increase in pollution despite the pollution controls, which in turn causes food production levels to drop which causes the human population to collapse?

So it appears the moral of the lesson is that if we do not curb our industrial growth, no matter what sort of controls or increase in resources we gain, we will be headed for a collapse point in the somewhat near future.

The highly problematic chromium prediction made by this super computer is a very good indication of why these predictions are not, and will not be, accurate for anything that occurs in the future. The reason is that they cannot account for any sort of change, most importantly qualitative change.

We should also not be so naïve as to think that such a policy outlook is wholly separate from the realm of politics.

In 1991, Club of Rome co-founder Sir Alexander King stated in “The First Global Revolution” (an assessment of the first 30 years of the Club of Rome) that:

“In searching for a common enemy against whom we can unite, we came up with the idea that pollution, the threat of global warming, water shortages, famine and the like, would fit the bill. In their totality and their interactions these phenomena do constitute a common threat which must be confronted by everyone together. But in designating these dangers as the enemy, we fall into the trap, which we have already warned readers about, namely mistaking symptoms for causes. All these dangers are caused by human intervention in natural processes, and it is only through changed attitudes and behaviour that they can be overcome. The real enemy then is humanity itself.” [emphasis added]

This is the moral of the lesson that is very clearly being enforced today, that the real enemy is humanity itself, where we are told to see ourselves only as akin to a virus which consumes its host Earth.

But what if our character does not lie in such a view? What if our character is actually more akin to that of a builder, a creator?

Everything that lives on this Earth consumes, but as we appreciate in any ecosystem, it also gives in return for what it takes. Is it possible that humans have a natural role to play in all of this?

Let us look at some of these qualitative changes we are presently experiencing that will prove to completely change the playing field this super computer thought it was operating in.

Here is a graph made by RTF colleague Cuautemoc Reale-Hernandez, a nuclear engineer who delivered a wonderful RTF class on nuclear energy titled “Atomic Physics and Macrophysics: How Breakthroughs in the Atom Affords Us Freedom to Explore Space.”

The graph shows the global distribution of electricity (China has certainly surpassed UK, Italy and Spain at this point since the data comes from six years ago and China has been making rapid progression in its energy production). What this means is that the greater majority of the world would need to produce one nuclear reactor every 3 days for 30 years to catch up to the level of 900 W/person that we enjoy in North America and South Korea.
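To give a sense of scale, the build rate quoted above can be turned into a total count. This is only a sketch of the arithmetic implied by "one reactor every 3 days for 30 years"; the function name and the 365.25-day year are illustrative assumptions.

```python
DAYS_PER_YEAR = 365.25  # assumption: average year length including leap years


def reactors_built(years, days_per_reactor):
    """Whole number of reactors completed at a steady build rate."""
    return int(years * DAYS_PER_YEAR / days_per_reactor)


total = reactors_built(30, 3)
print(total)
```

At that pace the total comes to roughly 3,650 reactors over the 30 years.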

If we look at the social progress index vs energy per country, we can see very clearly that energy is the leading factor in standard of living in a country.

Nuclear power is the greatest source of energy density we presently have available.

Here we see Cuautemoc holding a lead pellet that is equivalent in size to 300g of uranium. This one little pellet has the capability to power the energy needs of one person living in a first world country for 80 years. The price of the uranium is $60/kg.

Presently the concern over fission nuclear power is the waste factor. One very effective solution to this that already exists is the Breeder Reactor.
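The figures in this paragraph imply a fuel cost per person, which can be worked out in a few lines. This is a sketch using only the numbers quoted above (300 g, $60/kg, 80 years); the variable names are illustrative, and it covers the raw uranium price only, not the cost of a reactor or fuel processing.

```python
PELLET_MASS_G = 300        # from the article
PRICE_USD_PER_KG = 60      # from the article
YEARS_POWERED = 80         # from the article

# Cost of the uranium in one pellet, converting grams to kilograms.
pellet_cost_usd = PELLET_MASS_G * PRICE_USD_PER_KG / 1000

# Spread that cost evenly over the years of supply.
cost_per_year_usd = pellet_cost_usd / YEARS_POWERED

print(f"pellet: ${pellet_cost_usd:.2f}, per year: ${cost_per_year_usd:.3f}")
```

On these figures the uranium for one person's lifetime supply costs about $18, or a little over 20 cents per year.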

For further details watch Cuautemoc’s RTF lecture Atomic Physics and Macrophysics: How Breakthroughs in the Atom Affords Us Freedom to Explore Space

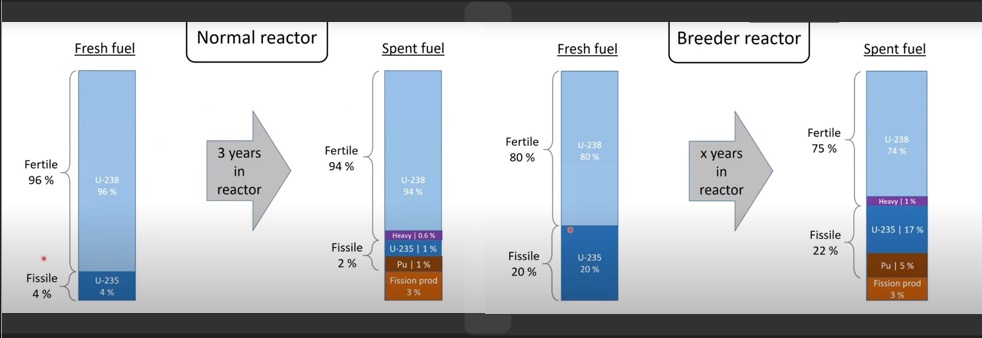

In a normal nuclear fission reactor, as seen on the left, the fresh fuel must contain at least 4% fissile fuel (U-235, ready for reaction); the rest is fertile fuel (U-238), which must first absorb a neutron to be converted into fissile fuel (plutonium-239) before it can undergo fission. After approximately three years, the percentage of fissile fuel is too low to continue the cycle. At this point, the spent fuel typically consists of about 0.6% heavy isotopes known as transuranic elements, which are the most dangerous component of spent fuel since they can stay radioactive for thousands of years. These heavy isotopes can undergo further reactions that allow them to eventually undergo fission.

The fission waste products which we see amount to about 3% of the spent fuel, are much less radioactive and have many useful applications such as in the field of medicine.

Once the fuel is spent in these normal nuclear fission reactors, it is stored, since it can still be used but requires a different reactor setup. Breeder reactors already exist and can process spent fuel.

Breeder Reactors use fresh fuel that is 20% fissile and 80% fertile, such that after x number of years they still have a large percentage of fissile fuel.

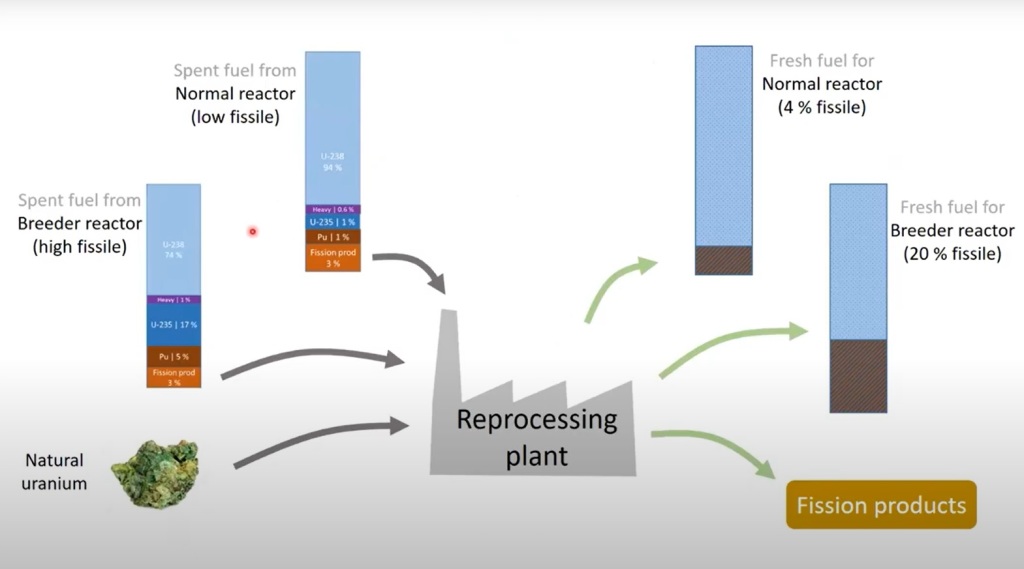

Using a reprocessing plant, we can mix the spent fuel of a breeder reactor with that from a normal reactor and create fresh fuel for both types of reactors [see image above]. In this cycle, the heavy isotopes never leave the cycle and thus are not waste products; they continue within the cycle until they are broken down to fissile fuel which can then be used. Thus the only waste products in such a system are the fission products, which are much less radioactive.

There are many beneficial uses for fission products such as medical isotopes. We have enough uses for fission products that they need not be left stored somewhere but can be used to further benefit society.

However, this will certainly not be enough to assuage the fears of many on the safety of nuclear fission reactors, so let us take a look at this question of the nature of radioactivity and how it interacts with our world, with or without nuclear reactors.

First off, believe it or not, the question of what level of radiation is safe vs. unsafe has never been clearly explained in media reporting. Most people don’t really know how to think about what is considered an unsafe level of radiation.

Does the average individual know how to think about the qualitative difference between 6 mSv vs. 50 mSv on human life or life in general? Or the effects on nature with such background levels of radiation? The answer is a resounding no. (We will come back to this question shortly)

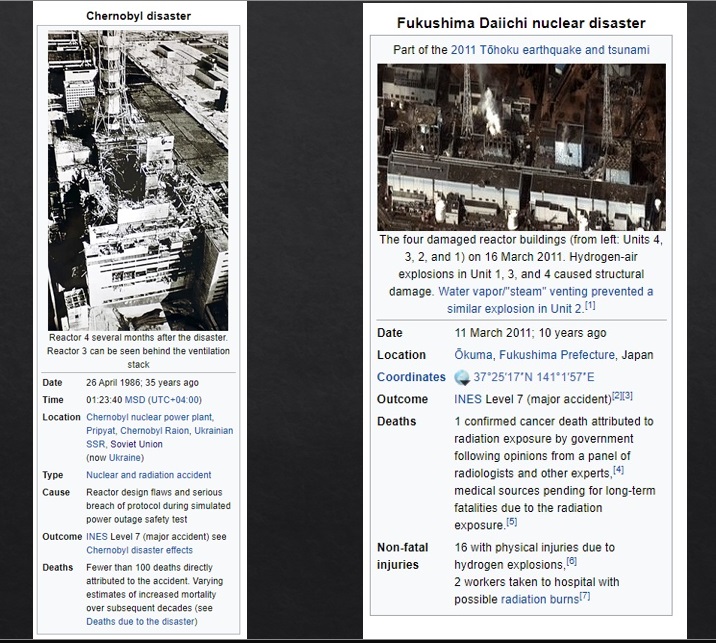

Much of what has fueled this fear of background radiation levels are the events of Chernobyl and Fukushima.

However, upon closer inspection, we see that in the case of Chernobyl the number of deaths clearly attributed to the accident was fewer than 100, and these were directly related to the blast. In the case of Fukushima we see, surprisingly, only one confirmed death in relation to the incident, with only 16 non-fatal injuries.

So why has there been so much media sensation over the sheer horror caused by these two incidents? Well, this has been due to the estimates (yes, estimates, which even Wikipedia acknowledges) of how many deaths have been caused due to the long-term effects of these two blasts, namely in cancer deaths.

However, there is a very large problem with how these estimates are being calculated.

Once again, we see a linear extrapolation. In this case it is the linear no-threshold (LNT) model. How is LNT used to predict the number of deaths that will occur with a certain dose of radiation? Let us look at another example using a more familiar substance to gain a better idea.

Here is an example of the LNT logic with the substance Vodka. Let us say that a person has a 50% chance of dying if they drink a full 750 ml bottle of vodka within 30 minutes.

[Note: the number does not need to be exact but rather our understanding of how the logic is applied, we could change it to 15 minutes or 5 minutes, or 1L or 2L etc. it would not make a difference to how the logic will quickly become an absurdity when further applied.]

If one individual has a 50% chance of dying after consuming one 750 ml bottle in 30 minutes, then two individuals who together consume two 750 ml bottles in 30 minutes should result in one death, since a 50% risk per bottle across two bottles equates to one death.

If ten individuals share two 750 ml bottles of vodka in 30 minutes, which is 10% of the total each, there should still be, according to the logic of LNT, one death, since two bottles equate to one death.

If one hundred individuals were to share two bottles of vodka in 30 minutes, which is 1% of the total each, the LNT model would still predict one death, since, you guessed it, two bottles of vodka consumed in 30 minutes will always, always equate to one death.

This is the model presently being used to predict future cancer deaths due to radiation exposure. When we apply the LNT logic to a more familiar substance such as vodka, it is plain to see that this is very far from an exact science for predicting anything.
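The arithmetic of the vodka example can be written out as a short Python sketch. It assumes, as the example does, that risk scales in direct proportion to the amount consumed, with no lower threshold. The function names are hypothetical, and the 50% per-bottle figure is the illustrative number from the example, not medical data.

```python
def lnt_individual_risk(bottles_per_person, risk_per_bottle=0.5):
    """Risk for one person under a linear no-threshold assumption.

    risk_per_bottle=0.5 is the illustrative figure from the text,
    not a measured value.
    """
    return bottles_per_person * risk_per_bottle


def lnt_expected_deaths(total_bottles, people, risk_per_bottle=0.5):
    """Expected deaths when `people` share `total_bottles` equally."""
    per_person = total_bottles / people
    return people * lnt_individual_risk(per_person, risk_per_bottle)


if __name__ == "__main__":
    # Two bottles shared among 2, 10, and 100 people, as in the text.
    for people in (2, 10, 100):
        deaths = lnt_expected_deaths(total_bottles=2, people=people)
        print(f"{people:>3} people: {deaths:.2f} expected deaths")
```

Because the per-person risk shrinks exactly as fast as the group grows, the predicted total depends only on the two bottles, whatever the size of the group.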

There is another issue, and that is, what are unsafe levels of radiation?

First, you may not know that there is a natural background of radiation on Earth, and that it changes depending on several factors, which are natural in origin and not caused by human activity, as we will see.

Recall that we receive the rays of the sun as well as cosmic rays from our solar system and galaxy and likely beyond, that shape the radioactive environment surrounding and within Earth.

Levels of background radiation. Upper left picture: 2.20 mSv flying over the Pacific Ocean, bottom left picture: 30.99 mSv at a beach in Brazil, right picture: 0.30 mSv in New Hampshire, United States.

In the above pictures we clearly see higher background radiation than is typically found in the metropolitan cities shown earlier. This is because, for one thing, background radiation increases with elevation. This makes sense when we again remind ourselves that we receive our radiation primarily from the sun and cosmic rays.

It has been observed in numerous medical studies that people who live at higher elevations tend to be in better health and live longer.

In the case of the beach in Brazil, as with hot springs, which are also highly radioactive compared to typical background radiation levels, these places tend to be recognized by the local community for their healing qualities. Many of the locals at this particular beach in Brazil, as reported in the documentary Pandora’s Promise, cover their bodies with the sand for its health benefits. This is not a unique phenomenon; there are many beaches throughout the world whose sands are known for their healing qualities.

What are we thus to think of radiation in this context? It appears that higher levels of radiation, up to 300x higher than we experience in metropolis background radiation levels, are actually a benefit to our bodies.

Background radiation levels. Upper left picture: 0.91 mSv village by Chernobyl, lower left picture: 3.67 mSv at the Chernobyl site, upper right image: 0.18 mSv village in Fukushima, bottom right picture: 0.70 mSv by a nuclear waste storage container.

If we were shown the above levels without any context, it would be easy to take the 3.67 mSv at the Chernobyl site as an indication of an ongoing danger. However, in the context of what we just discussed about natural background radiation levels, this does not look concerning at all.

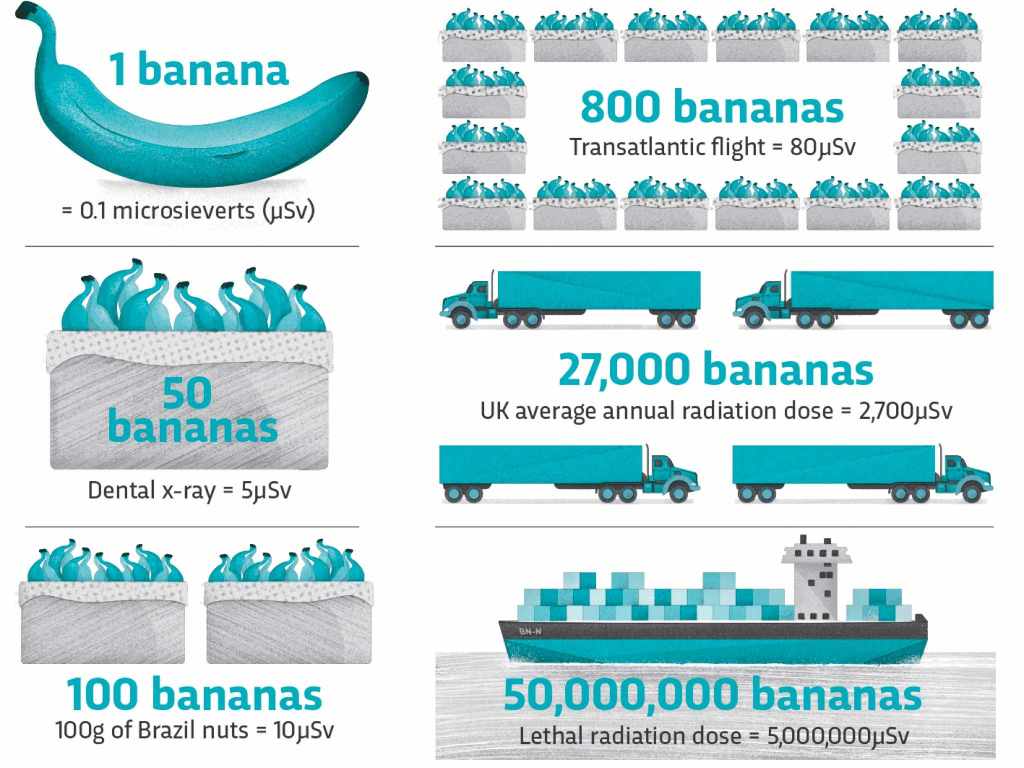

To further this point, let us look at an example of a popular food many of us consume on a somewhat regular basis, the banana!

Did you know that the banana is one of the most radioactive foods? (Note: Brazil nuts are even more radioactive.)

As we can see with this wonderful graphic, if we were told that 5 million microSv of radioactive material sank in the ocean, you could imagine the sort of crazed media sensation that would occur throughout the world, with even whole cities being evacuated. However, if we were instead told that simply 50 million bananas sank in the ocean, no one would bat an eye.
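The comparison rests on a simple conversion, sketched here in Python. The per-banana dose is not stated directly in the text; it is derived from the two figures quoted above (5 million microSv for 50 million bananas). The constant and function names are hypothetical, and the "banana" is an informal unit rather than an official one.

```python
# Dose per banana implied by the figures in the text:
# 5 million microSv corresponds to 50 million bananas.
MICROSV_PER_BANANA = 5_000_000 / 50_000_000


def bananas_to_microsv(bananas):
    """Convert a number of bananas to an equivalent dose in microSv."""
    return bananas * MICROSV_PER_BANANA


def microsv_to_bananas(microsv):
    """Convert a dose in microSv to the equivalent number of bananas."""
    return microsv / MICROSV_PER_BANANA


if __name__ == "__main__":
    print(f"{bananas_to_microsv(50_000_000):,.0f} microSv")
    print(f"{microsv_to_bananas(5_000_000):,.0f} bananas")
```

The same two helpers can restate any dose quoted in microSv as a number of bananas, which is the kind of comparison the graphics here make.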

Below is another great graphic to give you an idea of how much radiation exposure an individual receives in different scenarios in terms of bananas.

In addition, it should be noted that nuclear power is officially recognized as being among the safest energy sources in the world, on par with hydropower, wind and solar.

Whatever chart you look at, the consensus is the same: nuclear is just as low in deaths as solar and wind and, depending on the chart, the lowest.

In addition, the material required to produce solar, hydro, wind and geothermal power in each case greatly surpasses the material required to build a nuclear plant (especially solar, which requires very toxic materials for its production, and there is no efficient manner to dispose of these toxic panels once they are no longer in use). In fact, the material required to build solar panels, for instance, can only be generated using forms of energy other than solar and wind. This is possibly the greatest contradiction in the thought that solar and wind are capable of replacing all other energy forms, since they do not even have the capability of producing their own required parts.

If the social progress index is undoubtedly linked to energy production of a country, and nuclear fission is safe and clean, why is there suppression of nuclear power when it has the capability of massively increasing the standard of living throughout the world? Well, as with all things having to do with big ideas, there is the matter of politics. And if politics has decided that industrial growth is not a good thing, well, that means nuclear power is also not a good thing.

This is especially relevant to understanding the case of nuclear fusion, by far the cleanest and safest form of energy we know we are capable of in the near future. Unlike fission, fusion has absolutely no risk of a meltdown, since it operates differently from nuclear fission reactors, and it produces absolutely no waste.

It also has the capability of producing energy that is about 1000x greater than what we are presently capable of with nuclear fission.

So why don’t we have nuclear fusion yet?

As we can see with the above graph, discussion of fusion power has been going on for quite some time. What the 1976 studies also noted were the levels of funding that would be required to make fusion possible. As we can see, we have only ever been slightly above the “fusion never” level, and since the mid-1980s we have been well below it.

Fusion power is a challenging endeavor, which not only needs the support of funding for its materials, but also will likely require, as with all great science, international collaboration, since many great minds always work better than one.

If you think that we do not have the means for this funding perhaps you should take a look at the graph below.

Source: Jason Ross, Schiller Institute

As you can see, the money is there, and considering what Europe is presently going through with its energy crisis, I would put forward that investing only in solar and wind does not provide stable energy for the people. Just as a farmer doesn’t grow just one type of crop, energy forms that are highly affected by weather are not a reliable primary source of energy for large populations.

Thus the reason why nuclear has not become the leading energy source is a political reason. The reason why we have energy shortages presently in localized regions is also political. It is not due to a scarcity of resources and we will not be even close to reaching any limits in the next one hundred years.

However, it is a valid question to ask: what does our long-term future look like here on Earth if we transfer over to nuclear power, and eventually fusion power? Will there be a point where we do hit a critical carrying capacity plateau?

Well, one very exciting prospect with nuclear fusion is the plasma torch, which has the capability of turning landfills into resource mines.

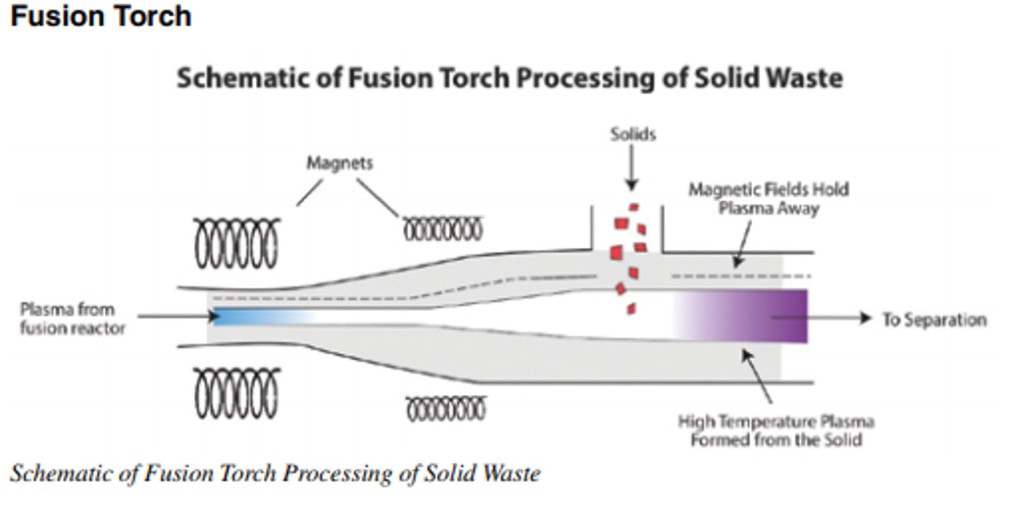

The next domain for the future of industry, which will completely revolutionise our relationship to resources and waste, lies in low and high temperature plasma processes, which will dramatically increase the productivity of steel, iron, titanium and any other metal resources needed for a modern society.

The plasma torch functions by injecting gas into a chamber. An electric discharge traveling from the negatively charged cathode to the positively charged anode heats up through the resistance of the gas to such a high temperature that ionization occurs. The resulting plasma can reach temperatures of 15,000 degrees Celsius, which can reduce any material to its elemental components.

The next step in more powerful and efficient plasma processing will be in fusion plasma torches which will open up a new realm of possibilities in the degree of precision in which we can transform energy and matter.

Contents put into the fusion torch are shock vapourised and become part of the plasma as separate ionised elements and electrons. Once in a plasma state various methods can be used to select the desired elements and isotopes based on their atomic as opposed to their chemical properties, allowing for the formation of very specific chemical compounds creating batches of very pure chemical structures tailored down to the isotopic level.

This will allow for the formation of more advanced materials than we can currently produce, materials which are presently impossible to create with lower energy yield technology.

Just to give the reader an indication of how plentiful resources will be with the plasma torch technology, within an average cubic mile of just plain dirt, mining of iron could be increased by 8x, aluminum by 200x, tin by 100x, and zinc by 6x beyond the present annual US production. This will not be necessary, but showcases how abundant resources are just within the United States.

Source: All the World’s a Mine video


With plasma torch technology, the pure elements can either be safely released into the environment, as is the case for nitrogen or oxygen, or they can be totally recycled as industrial raw materials, such that absolutely ALL POLLUTION IS THUS ELIMINATED.

Plasma torch technology has been commercialised for decades, but it is not in widespread use because of its large electricity consumption, which currently makes it very expensive. Only military bases and some specialised industries use it at present.

However, with fusion technology, electricity for nations will become the cheapest it has ever been, making it economically viable for all industrial waste, industrial pollution, landfills, and garbage islands in the ocean to be recycled into resources that can be used once again for commercial and industrial needs.

Suffice it to say that plasma torch technology will not only get rid of all landfills in a clean and sustainable manner, but will also mean that there is no such thing as waste anymore, since everything we use can be used over and over again as a resource with no end. Even the material of old, out-of-date infrastructure can be reused, once broken down to its elemental components, to supply improved material for new infrastructure. Wood burning and fossil fuels will no longer be needed.

Water canals, nuclear plants and high speed rail all need a lot of steel, far more than our current worldwide capacity to produce.

In a fusion economy there will no longer be such a thing as limited resources, and zero-sum artificial restrictions will cease to exist. There will be enough not only to support a growing population on Earth, but to support populations that in the future will inhabit the surface of the Moon and Mars.

Fusion will also produce the rockets we require in order to travel back and forth to Mars in a timely fashion.

In a fusion economy, the future will look towards the existence of yet-to-be created potential.

The greatest good for the greatest possible number will become a reality. Resource wars and economic competition over limited resources will be no more, and cooperation towards universally beneficial projects, not only cross-continental but interplanetary, will become the new norm.

Plan for Tianjin, China. Example of how future cities can be designed throughout the world.

I will end here with what China is presently working on, which is the Tiangong (meaning Heavenly Palace) space station. It has been decided that the International Space Station (ISS) is no longer to be maintained and will cease to be operational sometime in 2024. China, which has been banned from participating in the ISS due to the decision of President Obama in 2011, has had no choice but to work on its own space station, and the timing could not be more apt.

By the year 2024, Tiangong will be the only operational space station, and China has made it clear that all countries are welcome to use this space station for their research needs. It should also be noted, that although Tiangong is much smaller than the ISS, it is built in such a way that pieces can always be added on, such that it can grow much larger in size, depending on whether there are enough countries interested, which I think will most certainly be the case.

Tiangong, Heavenly Palace Chinese Space Station

On Sept 29th, 2011 China launched its first space laboratory Tiangong-1, followed by a more advanced space laboratory Tiangong-2 on Sept 15th, 2016. The core module, named Tianhe (meaning Harmony of the Heavens), was launched on April 29th, 2021 marking the start of the Tiangong Space program.

This past mid-October, China launched the second of four crewed missions, Shenzhou-13, to continue building the Tiangong space station, which is scheduled to be completed by the end of 2022. During the first crewed mission, which concluded this past September, three taikonauts stayed on Tianhe for 90 days.

This second crewed mission is expected to stay in orbit for six months.

Chinese taikonauts on second crewed mission to the Tiangong space station, from left to right: Zhai Zhigang mission commander, Wang Yaping and Ye Guangfu.

This is a great reminder to all of us that we really don’t have limits to growth, as long as we are inspired to create, by challenging what is deemed impossible and showing that it is indeed very possible. There are no set limits, there are no set parameters, since our mind has the capability to always set the boundary condition further and further beyond.

Our growth and development do not need to be a negative thing. They can be a very positive thing, in harmony with nature and with the universe, and we can have an optimistic future if we set our course towards that bright star.

*


Featured image: Europe at night viewed from space with city lights showing human activity in Germany, France, Spain, Italy and other countries, 3d rendering of planet Earth, elements from NASA