Psychology: Pretending There Is Nothing Wrong

Part 20 of the 'elephants in the room' series


***

“One of the saddest lessons of history is this: If we’ve been bamboozled long enough, we tend to reject any evidence of the bamboozle. We’re no longer interested in finding out the truth. The bamboozle has captured us. It’s simply too painful to acknowledge, even to ourselves, that we’ve been taken. Once you give a charlatan power over you, you almost never get it back.”(1)

In 2011 there was a devastating nuclear meltdown at the Fukushima Daiichi nuclear power plant in Japan. An investigation commissioned by the Japanese parliament concluded that:

“its fundamental causes are to be found in the ingrained conventions of Japanese culture, our reflexive obedience, our reluctance to question authority, our devotion to sticking with the programme”.(2)

However, there is nothing specifically Japanese about these attitudes. A similar mindset explains many of the issues discussed in other posts in this series. This post is the first of two that offer some insights into why we behave as we do. Why do powerful people in governments and corporations commit so many crimes? Why do people tolerate a government that commits war crimes? Why do people turn a blind eye when large corporations repeatedly behave unethically? Why are we so easily misled?

Do We Want To Know The Truth? Denial and Self-deception

Stanley Cohen wrote a book entitled ‘States of Denial’, which looked at why whole populations allow their governments to commit atrocities. He described a mindset of ‘simultaneously knowing and not-knowing’: we partially know something, but we do not want to discover the rest, because we fear finding something troubling.(3) The human brain is capable of great insights and independent thought, but we have conditioned ourselves not to ask too many difficult questions, because we are afraid of the answers. We are vaguely aware that we are choosing not to look at the facts, without really understanding what it is we are evading.

This is usually called ‘wilful blindness’ or ‘denial’. It covers a huge range of phenomena, from governments committing atrocities, and companies behaving unethically, to individuals trying to convince themselves that their partners are not having affairs. If we commit or witness harmful practices, we try to deceive ourselves into believing that they were reasonable. We come up with justifications, we use euphemisms to avoid accurate descriptions, and we ignore the consequences.(4) This form of self-deception allows individuals, organisations and even whole countries to deny knowledge of things that make them uncomfortable.

Numerous government and corporate employees who have taken part in unethical behaviour have admitted afterwards that they knew their actions were wrong, but had managed to convince themselves that the normal rules did not apply. Most people in Britain and the US, including journalists, have some understanding that the destruction of Iraq and Libya was a monstrous crime, but they are so uncomfortable admitting this that they delude themselves into believing it is not true.

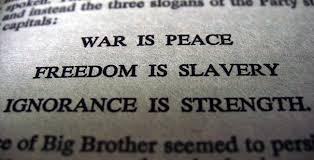

Brainwashing – We are lied to every day

The more often people are exposed to an idea, the more likely they are to accept it. If we hear the same information from the media over and over again, we come to believe it must be true. If we are also surrounded by friends, family and colleagues repeating the same perspective, because they have been bombarded with the same misleading information from the media, then it becomes harder and harder to say it is not true.

Convincing people to believe distorted versions of events is a form of brainwashing. Every soldier in history who has killed people in another country has been brainwashed to some extent. Soldiers are led to believe that shooting people, dropping bombs on them or burying them alive beneath a tank is reasonable, even if the people being killed are trying to surrender. Ordinary people who support these policies have also been brainwashed into accepting them. This is connected to another aspect of psychology known as dehumanisation – the tendency to see others as less than human.(5) Dehumanisation plays an important part in enabling governments to get away with war crimes overseas, because the people being slaughtered, such as Muslims in the Middle East, are portrayed as different from us.

Confirmation Bias and a ‘Framework of Understanding’

Psychologists have recognised that our beliefs are an important part of how we see ourselves. We prefer information that confirms our existing views or beliefs, because it makes us feel good about ourselves. Psychologists use the term ‘cognitive dissonance’ to describe the discomfort people feel when they are presented with evidence that contradicts their existing beliefs. We try to reduce this discomfort, either by ignoring the information or by using faulty logic to justify what we already believe. This tendency to favour information that supports our existing beliefs is known as ‘confirmation bias’,(6) and is often divided into three main areas.

Firstly, biased search is where we actively seek out information that supports our existing views. Most newspaper readers will be aware that they choose a newspaper whose writers express views similar to their own.

Secondly, biased interpretation is where we interpret ambiguous evidence as supporting our existing position. We also find reasons to dismiss evidence that contradicts our beliefs, for example by convincing ourselves that the source is unreliable.

Finally, biased memory is where we remember information that supports our existing beliefs, and forget information that contradicts them. Over time, most people forget the detail of what they have learned. They create a framework of understanding, or a framework of knowledge. This is like a general overview of how we see the world. If new information is consistent with this framework, it fits into the framework easily, reinforces the framework, and might be remembered. If new information does not fit easily into the framework then we don’t know what to do with it, so it will tend to be dismissed and quickly forgotten.

Affection for beliefs seems to be similar to affection for people. Research has shown that some parts of the brain, including some of the areas responsible for critical thinking, are deactivated when we think about people we love. The same appears to be true when we think about our beliefs.(7) Our brains treat information that might challenge our beliefs differently from other information. The effect seems to be stronger for emotionally charged issues and for deeply entrenched beliefs. This is particularly the case where people believe in a powerful ideology or a big idea, such as faith in ‘the markets’. We are mostly unaware of how deeply these big ideas affect the way we think. Confirmation bias can lead people to keep believing something even when it is strongly contradicted by the evidence.(8)

Ideas seem ‘normal’ if lots of other people share them – even if they’re wrong

If a minority of people hold an unusual view, such as ‘the world is coming to an end’, they seem weird to the majority. But when ideas are widely shared they seem less weird, and may even come to seem normal. This was evident during the run-up to the financial crisis of 2007-2008. Faith in ‘the markets’ had become ever more entrenched, and few people openly questioned it. Most people, including supposed experts, chose to overlook the well-established downsides of financial deregulation, such as catastrophic crashes.

This is partly because people’s beliefs tend to develop over time. Whilst these beliefs are being formed they can be changed, but once they have become established they are much harder to change. In particular, once someone has stated an opinion publicly, they feel that changing it would make them look weak.(9) Eventually they feel they have too much to lose, and it becomes almost impossible for them to change their views. If a journalist has made a living openly supporting invasions of other countries, it is very difficult for them to admit that they have been supporting the worst crimes of this century.

This is particularly relevant to the belief that the people we have elected to run our country are reasonable people with good intentions. We want to believe that they are not insane, war-mongering sociopaths, so we come up with all manner of explanations for their crimes. If they kill two million people in Iraq, we convince ourselves that their explanation, that they were worried about WMD, or terrorism, or human rights, must be correct, even though, deep down, we know these are lies.

Destructive Obedience – Causing Harm by Following Orders

Some famous experiments have focussed on the role of obedience to authority. The best known of these were carried out by Stanley Milgram and first published in 1963.(10) These studies examined why individuals obey authorities even when the task is morally repugnant, when there is no reward for doing so, and when there is no punishment for disobedience. Participants were told to give what they believed were increasingly severe electric shocks to another person, who was in fact an actor receiving no real shocks. The studies found that many people will obey such orders: in Milgram's best-known version, about two-thirds of participants continued to the highest shock level. Variations of the experiment have been performed around the world to see whether the results hold in other countries. Whilst the details sometimes vary, the general principle that many people will do terrible things when following orders appears to be true.(11)

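Similar studies have been tried in real-world settings. In one, nurses were observed to see whether they would give a patient an overdose of an unfamiliar drug on the telephoned orders of a doctor they did not know. Again, perhaps surprisingly, very few questioned the instruction: 21 of the 22 nurses were stopped on their way to administer the drug.(12) Non-experimental, real-world evidence of these effects is widespread. One estimate suggests that as many as a quarter of plane crashes may be caused by ‘destructive obedience’,(13) and there is a famous incident in 1893 in which the battleship HMS Victoria sank after colliding with HMS Camperdown, because officers carried out an order that they could see was dangerous.(14)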

This unquestioning obedience creates repeated problems in many industries. Safety failings due to cost-cutting are common, even where people know that the cuts will create danger. In this situation, orders from bosses can make ethics, legality and safety irrelevant. Numerous oil leaks and explosions, such as the 2005 explosion at the Texas City oil refinery and the 2010 explosion on the Deepwater Horizon oil rig, have occurred because of cost-saving and corner-cutting on safety standards.(15)

Conformity and The Disappearance of Ethics

Conformity is where people try to fit in with those around them.(16) The most well-known experiments in this area were carried out by Solomon Asch in 1951.(17) Subjects were placed in groups and given simple tasks, such as judging which of three lines matched the length of a reference line. Unknown to the subject, all the other members of the group were ‘in’ on the experiment, and had been instructed to give the same wrong answer on certain trials. About three-quarters of subjects went along with the group's wrong answer at least once, rather than stating the obviously correct one. It turns out that under social pressure most of us would rather be wrong than alone. Independence of mind can lead to a sense of isolation and affect our self-esteem, so we try to protect ourselves by fitting in with the group.(18) This type of research has been repeated many times with consistent results. One of the most interesting findings is that some participants have no sense of having conformed: the process happens subconsciously.(19)

Conformity may explain a number of phenomena that researchers have observed. In particular, they have identified ‘groupthink’, where the desire for harmony within a group overrides an honest discussion of alternatives. People are reluctant to voice dissenting opinions because of their desire to conform. Another is group polarisation, where groups of like-minded thinkers tend to develop even more extreme views. It seems highly likely that both of these are at work when groups of politicians pursue extremist foreign policies such as war. Leaders appoint like-minded thinkers as advisors, and people with challenging opinions tend to be excluded from decision-making.

Real-world evidence of conformity also suggests that individual ethical standards decline in groups. Young medical students are unlikely to blow the whistle if they see something unethical, and research indicates that they are even less likely to do so after three years of medical and ethics training.(20) Doctors are reluctant to challenge their colleagues, even where evidence of incompetence leading to death is clear.(21) Many people who worked in finance in the boom years before the global financial crisis of 2007-2008 have explained that there was no clear sense of moral norms. If everyone around you is being rewarded for doing crooked deals, what is normal? If companies are spending lots of money lobbying politicians to change laws, this encourages them to believe that laws do not have to be taken seriously and are merely obstacles to be circumvented.(22)

Obedience and Conformity are a dangerous combination

In many workplaces, both obedience and conformity are present. Staff obey their superiors, but they also want to fit in with their colleagues. In practice, standing up against a consensus is difficult for numerous reasons.(23) Most obviously, many of the systems we currently have in place provide no encouragement or tangible reward for challenging decisions or bucking the trend. In fact, just the opposite is true. Questioning your colleagues, and particularly your superiors, can harm your position at work, your reputation, your career prospects and your financial rewards.

*


This article was first posted at medium.com/elephantsintheroom

Rod Driver is a part-time academic who is particularly interested in de-bunking modern-day US and British propaganda. This is the twentieth in a series entitled Elephants In The Room, which attempts to provide a beginner's guide to understanding what’s really going on in relation to war, terrorism, economics and poverty, without the nonsense in the mainstream media.

Notes

1) Carl Sagan, The Demon-Haunted World: Science as a Candle in the Dark, 1995

2) Justin McCurry, ‘Japanese cultural traits ‘at heart of Fukushima disaster’’, Guardian, 5 July 2012, at https://www.theguardian.com/world/2012/jul/05/japanese-cultural-traits-fukushima-disaster

3) Stanley Cohen, States of Denial: Knowing about atrocities and suffering, 2001, pp. 24-33

4) Margaret Heffernan, Wilful Blindness: Why we ignore the obvious at our peril, 2011, pp.258-259

5) https://en.wikipedia.org/wiki/Dehumanization

6) https://en.wikipedia.org/wiki/Confirmation_bias

7) Margaret Heffernan, Wilful Blindness: Why we ignore the obvious at our peril, 2011, pp. 45-46

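8) Lee Ross and Craig A. Anderson, ‘Shortcomings in the attribution process: On the origins and maintenance of erroneous social assessments’, in Daniel Kahneman, Paul Slovic and Amos Tversky (eds.), Judgment under Uncertainty: Heuristics and Biases, 1982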

9) Margaret Heffernan, Wilful Blindness: Why we ignore the obvious at our peril, 2011, p. 316

10) http://en.wikipedia.org/wiki/Stanley_Milgram

11) Brian Resnick, ‘The Stanford prison experiment was massively influential. We just learned it was a fraud’, Vox, 13 June 2018, at https://www.vox.com/2018/6/13/17449118/stanford-prison-experiment-fraud-psychology-replication

12) https://en.wikipedia.org/wiki/Hofling_hospital_experiment

C.K. Hofling et al, ‘An experimental study in nurse-physician relationships’, Journal of Nervous and Mental Disease, 1966, 143(2): 171-80, at https://pubmed.ncbi.nlm.nih.gov/5957275/

13) Eugen Tarnow, ‘Self-destructive obedience in the airplane cockpit and the concept of obedience optimisation’, in Thomas Blass (ed.) Obedience to Authority: Current perspectives on the Milgram paradigm, 2000, at https://www.taylorfrancis.com/chapters/self-destructive-obedience-airplane-cockpit-concept-obedience-optimization-eugen-tarnow/e/10.4324/9781410602022-11

14) https://en.wikipedia.org/wiki/HMS_Victoria_(1887)

15) http://en.wikipedia.org/wiki/Texas_City_Refinery_explosion

https://en.wikipedia.org/wiki/Deepwater_Horizon_oil_spill

16) https://en.wikipedia.org/wiki/Conformity

17) S.E. Asch, ‘Opinions and Social Pressure’, Scientific American, 1955, 193(5), pp. 31-35

18) Margaret Heffernan, Wilful Blindness: Why we ignore the obvious at our peril, 2011, p.296

19) G.S. Berns et al, ‘Neurobiological Correlates of Social Conformity and Independence During Mental Rotation’, Biological Psychiatry, 2005, 58(3), pp. 245-253

20) J. Goldie et al, ‘Students’ attitudes and potential behaviour with regard to whistle blowing as they pass through a modern medical curriculum’, Medical Education, 2003, 37(4), pp. 368-375, at https://pubmed.ncbi.nlm.nih.gov/12654122/

21) ‘Learning from Bristol: Report of the Public Inquiry into children’s heart surgery at the Bristol Royal Infirmary 1984-1995’ (also known as The Kennedy Report), July 2001, at http://www.wales.nhs.uk/sites3/documents/441/The%20Kennedy%20Report.pdf

22) Margaret Heffernan, Wilful Blindness: Why we ignore the obvious at our peril, 2011, p. 202

23) Stanley Cohen, States of Denial: Knowing about atrocities and suffering, 2001, p.19

Featured image is from New Forest Advisory